tags: [development, Google] categories: [Development]

Google Cloud Platform: Function and Database

IOT House Data

I have been capturing house data for some time now, and it has been a constant source of inspiration for me. I learned enough electronics to build the IOT sensor nodes, and then explored various approaches for capturing the data and presenting it in a meaningful way.

Most of my work has been on-prem. In other words, the data has been captured on a local Linux server that I purchased and have maintained for several years.

Cloudy Cost

I’ve also been exploring various cloud services, mainly Amazon Web Services (AWS) and Google Cloud Platform (GCP). (Yes, there are others.) I’m pretty sure that at some point I will stop maintaining my own local server and move my computing needs to a cloud service provider. However, the biggest obstacle for me is the unknown cost of cloud resources.

My local Linux box was a fixed cost when I purchased it 5 years ago. I incur a little maintenance cost because I have to keep it patched and running. These costs are easy to calculate and well within my budget, especially when spread over multiple years.

On the other hand, the cost of starting up a cloud instance and paying for it on an ongoing basis is a little opaque to me. The only way I know to understand these costs is to try something out and see how much money I burn through.

Simple Setup

This leads me to my recent experiment on Google Cloud Platform. I wanted to understand GCP Cloud Functions, so I decided to store some of my IOT data in a GCP Cloud SQL database (PostgreSQL). I built a very simple (and insecure) pipeline that sends my local house IOT temperature data through a GCP Cloud Function and into the SQL database. Here is a truncated sequence diagram showing the main actors.

In this sequence diagram, sensor data is published to my local MQTT server. A small MQTT listener subscribes to this data. When the listener is notified that new data is available on the MQTT topic, it translates the IOT data into a JSON REST call and posts it to a GCP Cloud Function. The Cloud Function, written in Python, parses the JSON body and issues an SQL INSERT into the GCP SQL database. Most (but not all) of the Cloud Function code is listed below for reference; it was derived from the GCP help documentation.

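```python
import datetime

from psycopg2 import OperationalError

# Not shown: module-level setup derived from the GCP sample, including
# CONNECTION_NAME, DB_INSERT_EVENT_TEMPLATE, pg_pool = None and __connect().


def mqttEventRecord(request):
    global pg_pool

    jbody = request.get_json(silent=True)
    if jbody is None:
        return ("Expected a JSON body", 400)

    if isinstance(jbody, dict):
        # Single data entry: make it an array of one.
        jbody = [jbody]

    # Stamp each event with a receive time if the sender did not supply one.
    for item in jbody:
        databody = item['body']
        if 'receivedt' not in databody:
            databody['receivedt'] = datetime.datetime.utcnow()

    print("MsgCount:[{}]".format(len(jbody)))

    # Lazy connect: create the pool on first use, preferring the Cloud SQL
    # Unix socket and falling back to a local database for development.
    if not pg_pool:
        try:
            __connect('/cloudsql/{}'.format(CONNECTION_NAME))
        except OperationalError:
            print("Fallback to local connection")
            __connect('localhost')

    conn = pg_pool.getconn()
    try:
        # "with conn" commits on success and rolls back if an insert fails.
        with conn, conn.cursor() as cursor:
            for item in jbody:
                cursor.execute(DB_INSERT_EVENT_TEMPLATE, item['body'])
            print("Committing insert with [{}] messages".format(len(jbody)))
    finally:
        # Always hand the connection back so the pool is never exhausted.
        pg_pool.putconn(conn)

    return "Done."
```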
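The MQTT listener that feeds this function is not listed in this post. The following is a minimal sketch of its translate-and-post step using only the Python standard library. It assumes each reading arrives as a JSON MQTT payload (bytes) whose fields match the `mqtt_event_v2` columns, such as `did`, `version`, `temp` and `rhumid`; `FUNCTION_URL`, `build_event_request` and `post_events` are hypothetical names, and the MQTT subscription itself is left out.

```python
import json
import urllib.request

# Hypothetical placeholder for the HTTPS trigger URL of the deployed function.
FUNCTION_URL = "https://REGION-PROJECT_ID.cloudfunctions.net/mqttEventRecord"


def build_event_request(payloads, url=FUNCTION_URL):
    """Wrap raw MQTT payloads (bytes) in the {"body": {...}} shape that
    mqttEventRecord reads, and build a JSON POST request for them."""
    events = [{"body": json.loads(p.decode("utf-8"))} for p in payloads]
    data = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        # Flask's request.get_json() only parses bodies declared as JSON.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_events(payloads, url=FUNCTION_URL, timeout=10):
    """Send one batch of readings to the Cloud Function and return its reply."""
    req = build_event_request(payloads, url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Posting a list rather than a single object matches the function's array handling, so several readings could be batched into one invocation.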

I used the Google Cloud command-line tool (gcloud) to republish the function several times as I worked through revisions.

```
gcloud \
    --project=$(PROJECT) \
    functions \
    deploy \
    --env-vars-file=$(ENV_VARS) \
    --region=$(REGION) \
    mqttEventRecord \
    --runtime python37 \
    --trigger-http
```

Getting a working prototype took about 4 hours.

Results

During the trial, I had 3 temperature and humidity sensors reporting. Each sensor reported one sample every 5 seconds. Over 48 hours I recorded ~90,000 measurements.
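The reporting rate puts a ceiling on how many rows a 48-hour run can produce. This quick check uses only the figures stated above:

```python
# Reporting setup described above.
SENSORS = 3
SAMPLE_INTERVAL_S = 5
DURATION_H = 48

samples_per_sensor = DURATION_H * 3600 // SAMPLE_INTERVAL_S  # 34,560
expected_rows = SENSORS * samples_per_sensor                  # 103,680
recorded_rows = 90_000  # approximate count reported above

print(f"expected at most {expected_rows:,} rows")
print(f"recorded ~{recorded_rows / expected_rows:.0%} of that")
```

That puts the ~90,000 recorded rows at roughly 87% of the theoretical maximum for a full 48 hours.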

Example Result set

```
postgres=> select * from mqtt_event_v2 limit 10;
 id |            dbdt            | did  | version | temp  | rhumid |         receivedt
----+----------------------------+------+---------+-------+--------+----------------------------
 44 | 2019-07-07 20:28:18.097017 | 0x08 | v0.2    | 77.56 |  37.67 | 2019-07-07 20:28:18.096017
 45 | 2019-07-07 20:28:18.34267  | 0x03 | v0.2    | 68.36 |   47.7 | 2019-07-07 20:28:18.341665
 46 | 2019-07-07 20:28:18.994779 | 0x07 | v0.2    | 80.82 |  38.38 | 2019-07-07 20:28:18.990413
 47 | 2019-07-07 20:28:23.432878 | 0x08 | v0.2    | 77.54 |  37.68 | 2019-07-07 20:28:23.431615
```

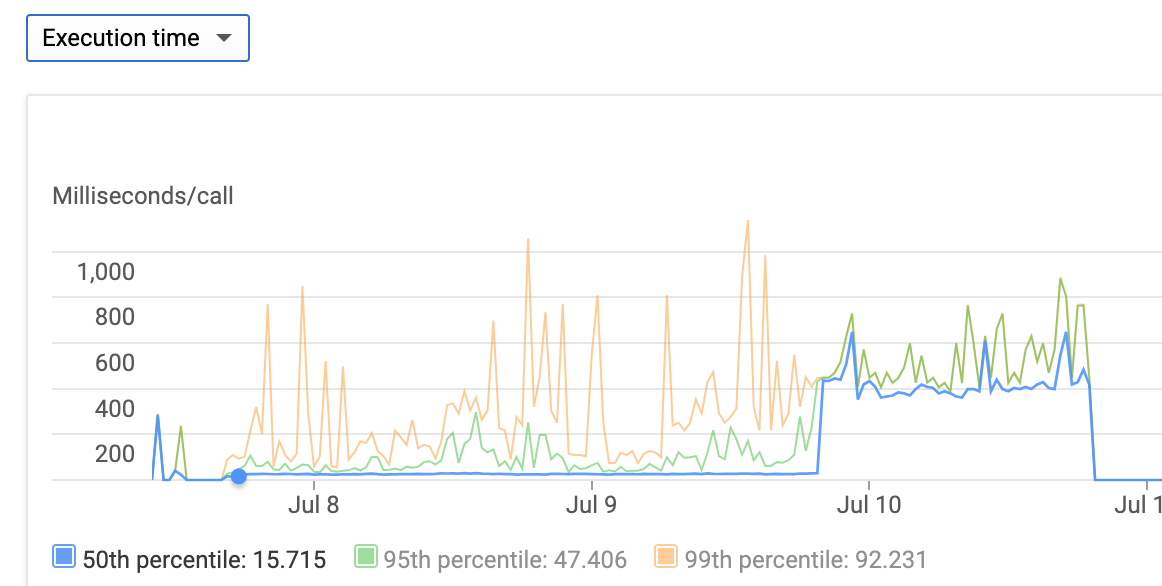

Using the Google Cloud Console web tools, I was able to monitor the frequency of function calls and the execution time of each call. Here is a sample screenshot showing the function's execution time over the course of the run.

Cost

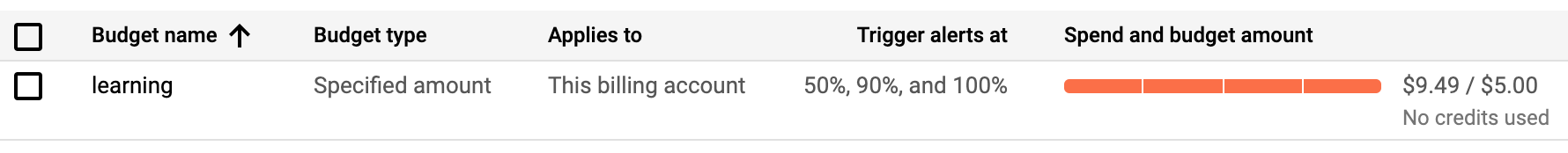

After I had run my experiment for a few hours, an alert notified me that I had consumed 50% of my learning budget. I set up the learning budget alert after hearing stories of budget overruns from people learning AWS and GCP. It’s a good thing I did; without it, I might not have caught the cost issue as early as I did.

Here is the current status of my budget alert. From my reading, these alerts are purely notifications: they cannot take action on your behalf to reduce or eliminate the source of the cost.

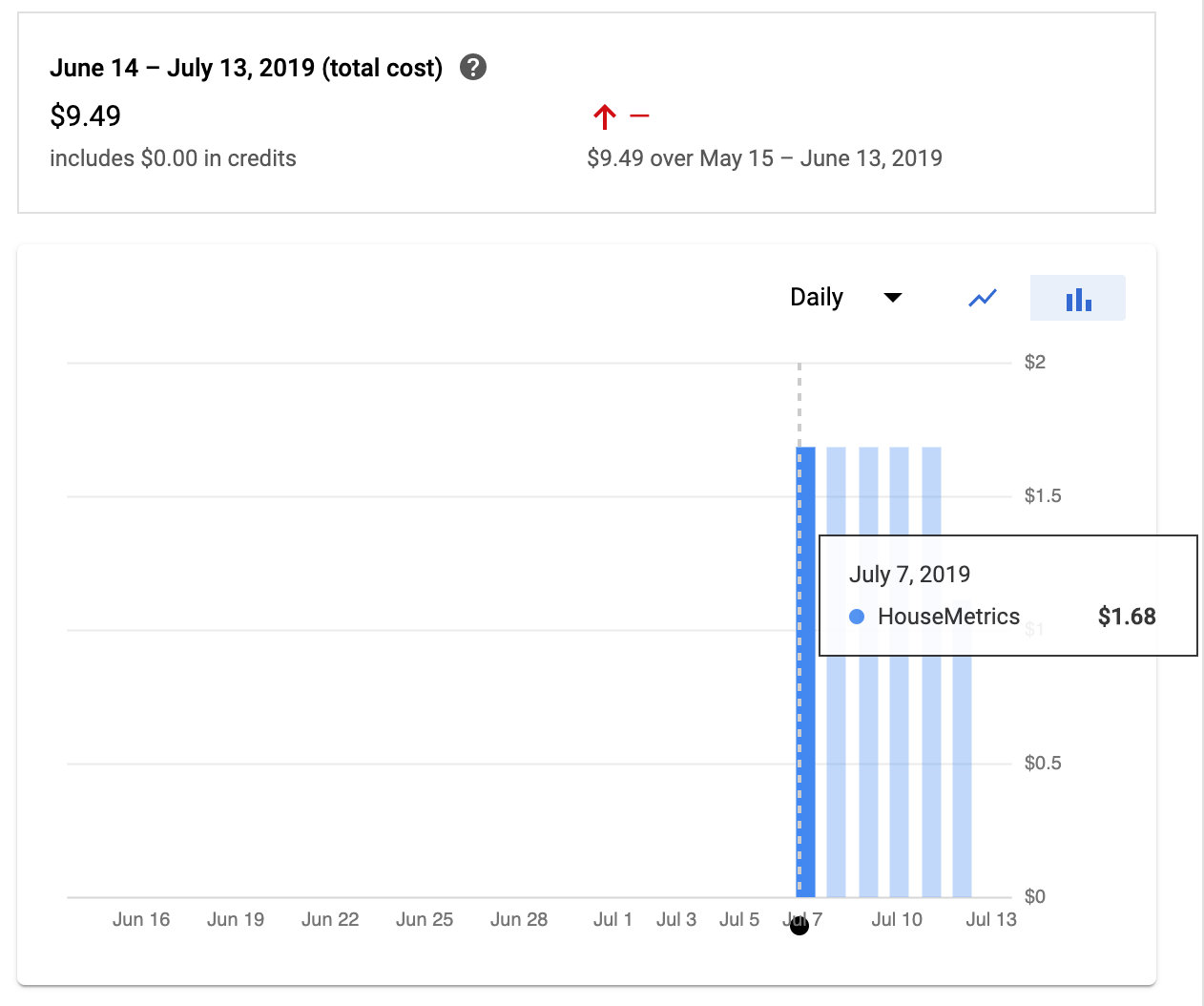

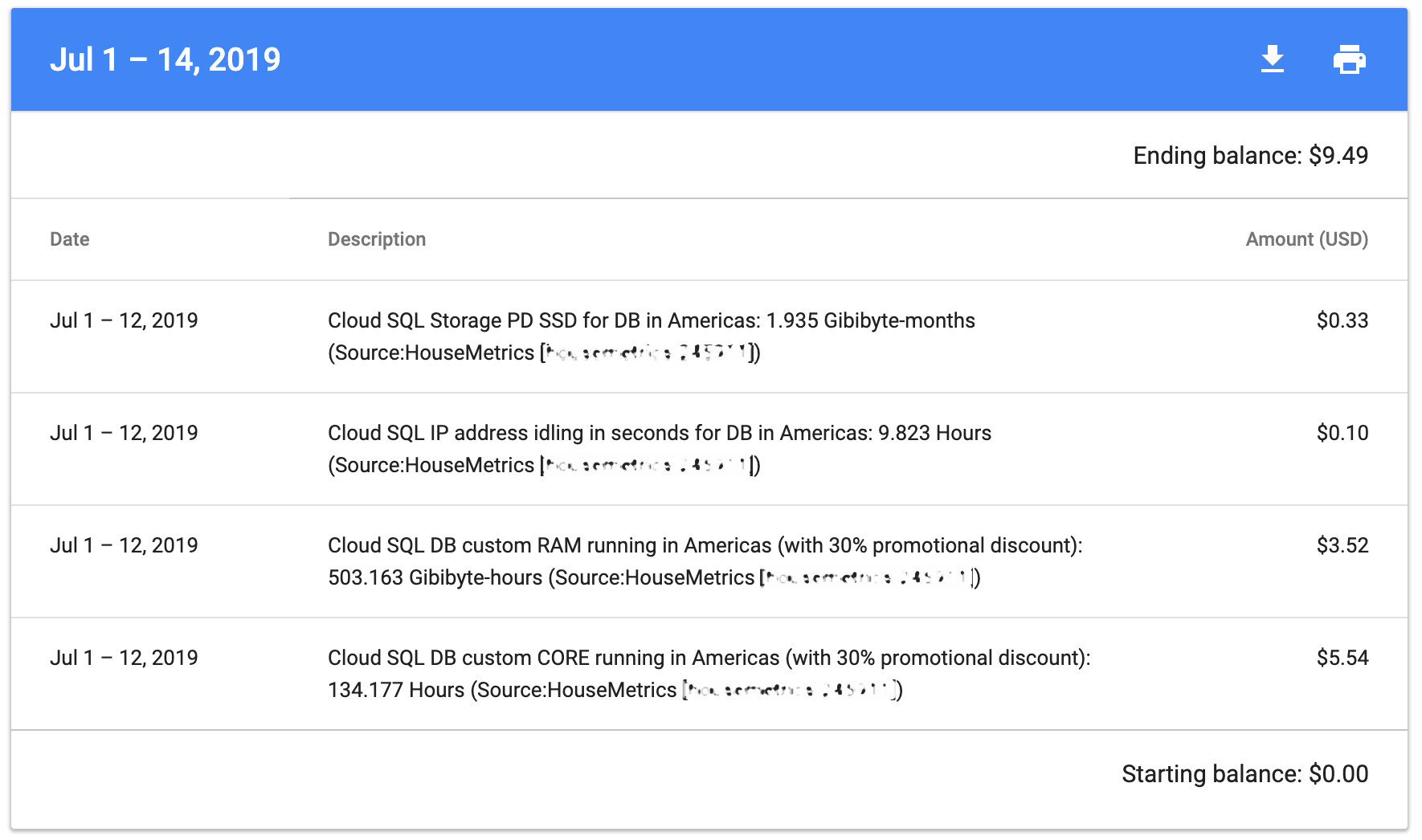

At first I assumed that the costs were coming from my function, but after investigating the Cost Breakdown I realized that the cost was accumulating from the Cloud SQL instance.

I’ll be honest: I didn’t pay much attention to the database, because I was much more focused on getting the function working. When I reviewed the configuration of the SQL instance, I found that I had chosen the most minimal recommended settings for all aspects of it: 1 vCPU and the minimum selectable memory (3.75 GB). The one exception is storage, where I chose a 10 GB SSD over a 10 GB spinning drive. According to the Cost Breakdown, the majority of the cost comes from the vCPU and the memory that goes with it.

Extrapolating, if I wanted to run this setup continuously, it would cost approximately $613 per year ($1.68 per day * 365 days = $613). The real figure is most likely higher, because the cost breakdown notes show that I’m getting a 30% discount. Without that discount the rate would be about $2.40 per day ($1.68 / 0.7), so perhaps as much as $876 per year.
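The extrapolation is easy to double-check. One subtlety matters for the upper bound: if the $1.68 per day already includes a 30% discount, removing that discount means dividing by 0.7, not multiplying by 1.3. The inputs below come straight from the cost breakdown figures above:

```python
DAILY_COST = 1.68  # USD per day, from the cost breakdown
DISCOUNT = 0.30    # discount shown in the cost breakdown notes

annual = DAILY_COST * 365
# If the daily figure is already discounted, the undiscounted price is
# daily / (1 - discount), not daily * (1 + discount).
annual_undiscounted = annual / (1 - DISCOUNT)
annual_naive = annual * (1 + DISCOUNT)

print(f"annual at current rate:   ${annual:,.0f}")
print(f"without the 30% discount: ${annual_undiscounted:,.0f}")
print(f"adding 30% instead:       ${annual_naive:,.0f}")
```

Adding 30% on top gives about $797, which understates the undiscounted cost of about $876.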

Summary

Running a Google Cloud Function is very easy. Cloud Functions appear to be simple handlers that receive an HTTP request and perform some action based on that request. Any additional information needed to execute the function has to come from some state repository, either a database or a file.

Beware of costs. Be prepared to adjust your architecture to use the lowest-cost mechanism that meets the needs of your task. I’m not sure yet what that would be in my case, but I’ll try a few options to see what works. Fortunately, I’m not under a time crunch to come up with a solution.

Set budget alerts. Both AWS and GCP provide this capability, and it can help you avoid a nasty unexpected charge. Fortunately, in my case I was able to catch the cost before it became too much.

Finally, I’m not saying that the costs are wrong or anything. I fully expected to spend some money on experimentation, and I’m very happy with the results. I now know that if I want to build an interesting cloud system, I must make at least $1000 in revenue per year to cover my costs.